Post-Graduate Program in Cloud Computing

Build Your Career Skill Set With Cloud Computing

- Live Mentorship-Driven Program

- 6 Months Online

With 6-Week Microsoft Azure Administrator Training Program (Optional)

360° Understanding of Cloud Ecosystem

AWS

Amazon Web Services

Azure

Microsoft Azure

GCP

Google Cloud Platform

1000+ Careers Transformed

Ashok Kumar Natarajan

Senior Technical Account Manager

Awesome learning model and process helped me clear Amazon's elaborate interviews

Sandhyarani Leishnagthem

Business System Analyst/PM

Well-structured program outline that covers the modules I was looking for: AWS, Azure, GCP, DevOps, Containers

Omar A Omar

Site Reliability Engineer

The program led me to earn two professional certifications: AWS Solutions Architect Associate and Azure AZ-900.

Elmer Ibayan

Consultant

Taking this course gave me a better picture of the actual cloud landscape

Hannan Javid

Excellent opportunity for IT professionals looking to advance their career path

Amit Kashikar

Data Governance Analyst/Architect

This course helped me transition to cloud-based data services

Kunal Kaul

Data Analyst

I was able to land a new job with one of the big finance companies in Melbourne, Australia, on a cloud project

Don Tuaro

Product Owner and Engineer

Dedicated program support helped me stay on track & get Certified

Anson Thomas

Principal Site Reliability Engineer

One of the most rewarding programs, with hands-on labs and projects with live weekend mentor sessions

Rigo Pedraza

Information Technology Project Manager

This program, along with the training, definitely helped me find a new job

Roshini G

Associate Technical Support Engineer

I got hands-on experience in whatever I learnt

Uzma Jilani

Forward Deployed Engineer

I started passing interviews for cloud data engineering roles & landed a new job

What makes the UT Austin Cloud Computing Program Unique

90+ cloud services covered in the curriculum

Learn in-depth cloud technology with in-demand tools and software, offered by AWS, Azure and Google Cloud.


Get trained for the AZ-104 Certification

Opt for the Microsoft Azure Training Program with the Post Graduate Program in Cloud Computing for a reduced cost

Live mentorship sessions

A certified professional from the industry mentors you through the entire program.


Networking and program support

A dedicated program manager guides you through any issue related to the program — dates, assignments, fees, and application.

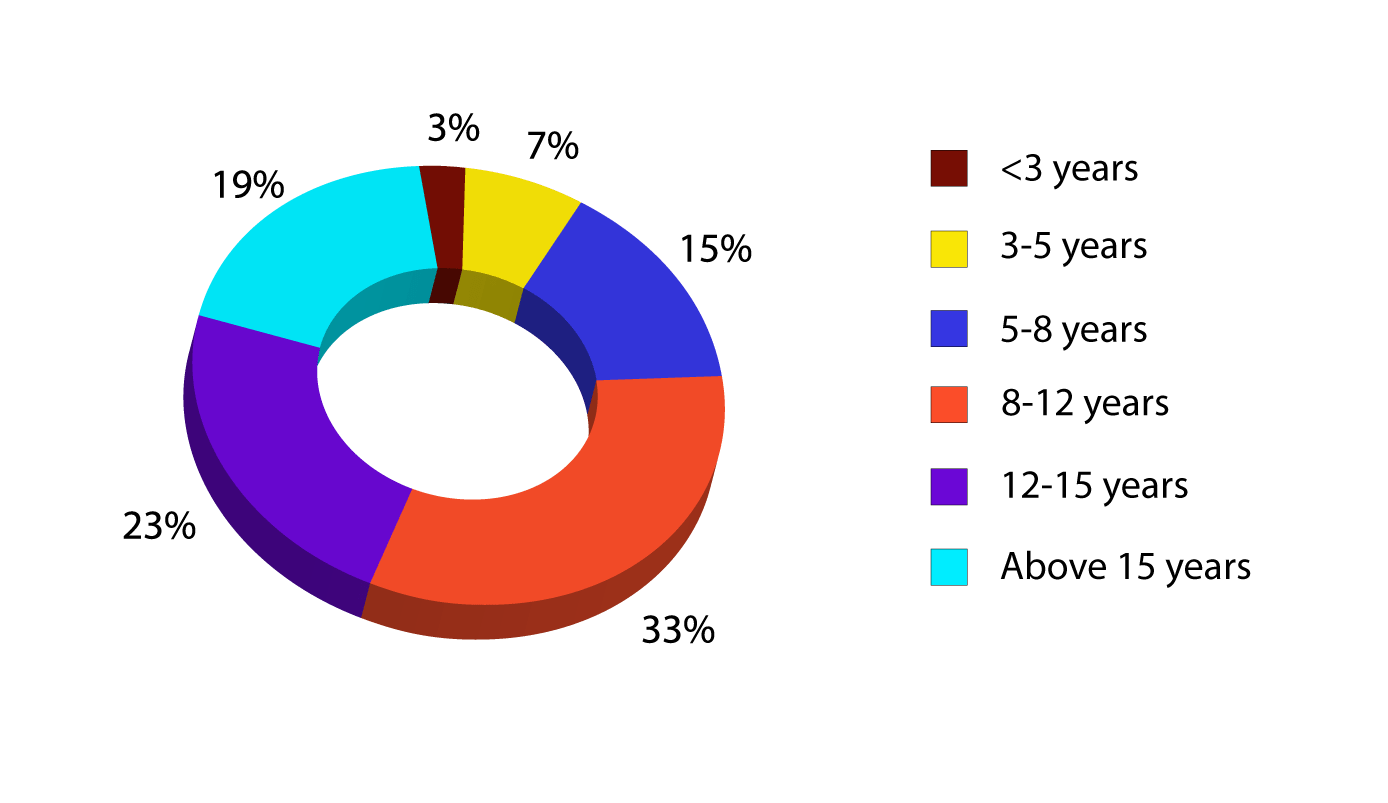

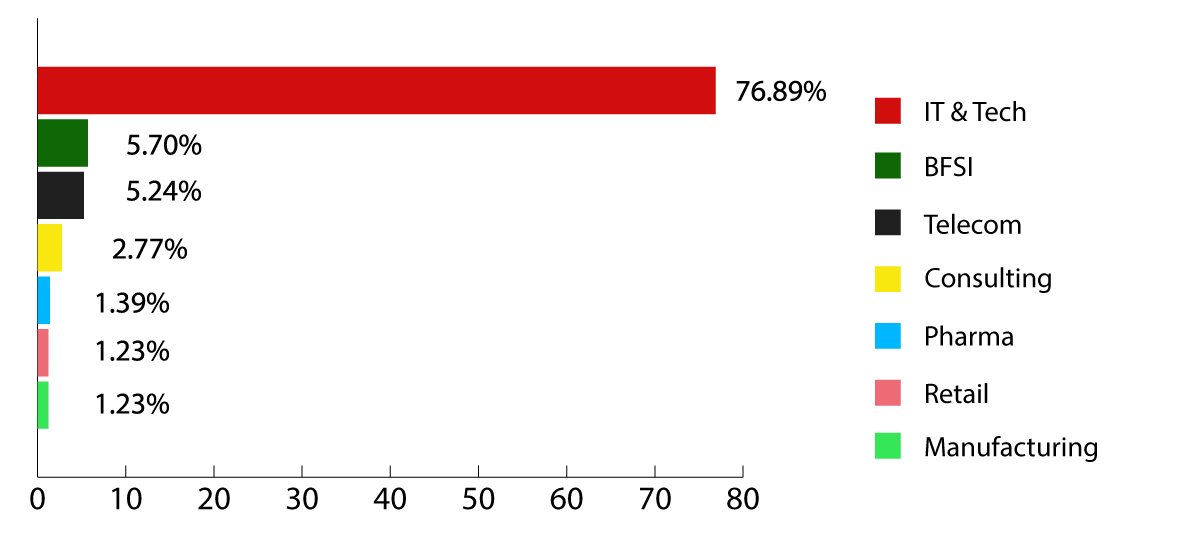

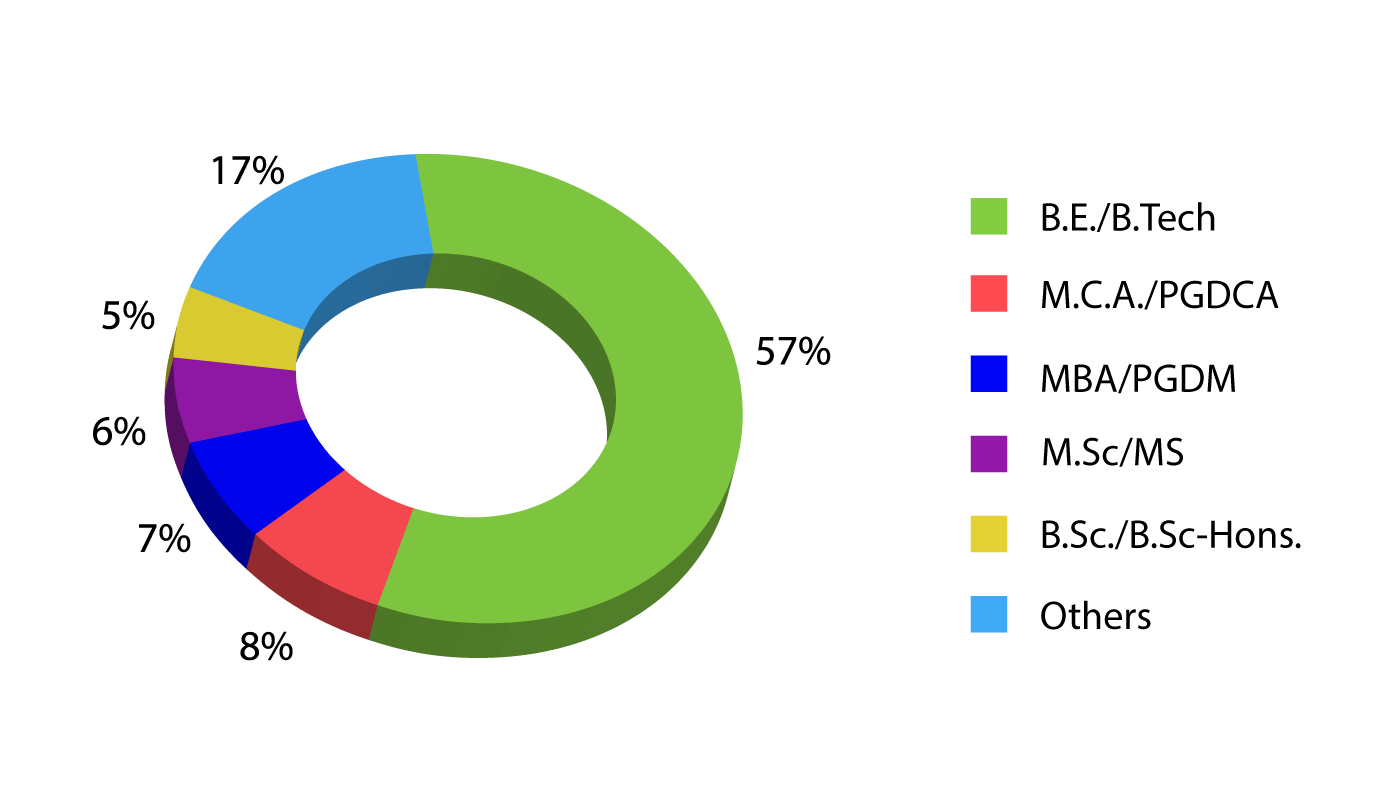

VIEW BATCH PROFILE

Dedicated career support

As leaders in the technology space, we provide personal mentors, networking and program support, and a university-grade syllabus.

Join the Most Comprehensive Cloud Computing Program & Transform your Career

Cloud Computing Completion Certificate

#6 Information Systems Graduate Programs, U.S. News & World Report, 2022

#6 Executive Education - Custom Programs, Financial Times, 2022

For any feedback & queries regarding the program, please reach out to us at utcloudcomputing@mygreatlearning.com


Comprehensive Curriculum

The PG Program in Cloud Computing has the most comprehensive curriculum, covering 90+ cloud services.

300+ hours of learning content

90+ cloud services

Understand the evolution of cloud computing and learn its basics before moving on to the concept of elasticity in the cloud pricing model and exploring the ever-expanding landscape of managed services.

- Learn the basic concepts of cloud computing

- Become familiar with the history and evolution of cloud computing

- Understand service, delivery and subscription models

- Review the classical enterprise, cloud cost economics and service offerings

- Get introduced to virtualization and its correlation with cloud computing

- Learn about cloud service taxonomy and next generation virtualization methods

Delve into the core building blocks of data center operations (compute/VMs, storage, and networking) and understand the concepts of Infrastructure as a Service (IaaS) and block- and object-based storage.

- Become familiar with the terminology and concepts of AWS and acquire proficiency in working with AWS

- Gain a comprehensive understanding of the various services offered by AWS public cloud platform

- Dive into the specifics of compute, storage, and network components within AWS.

- Implement generic cloud computing concepts through hands-on experience on the AWS platform.

- Develop the ability to create cloud cost projections using the AWS cost calculator.

- AWS global infrastructure

- EC2, Load Balancers, AMI

- EBS, S3, EFS, Volumes, Snapshots

- VPC, NAT, Bastion Hosts, Route 53

- Identity & Access Management

- Cost Calculator

Learn the key concepts of cloud-managed services (managed databases, caching, messaging, and serverless functions) and gain expertise in deployment and monitoring on public clouds, containerization, and DevOps.

- Understand cloud-managed services and gain insight into deploying and monitoring resources on a public cloud

- Learn to write serverless functions

- Learn the concepts of containerization and the Docker container system, and deploy applications.

- Learn the basics of DevOps and explore each phase of DevOps through demonstrations.

- Acquire skills to create a DevOps plan, choose the right tools and implement each phase to achieve CI/CD.

- AWS RDS

- AWS ElastiCache

- AWS CloudWatch

- SQS, SNS

- AWS Lambda

- Container - Docker

- AWS ECS

- DevOps on AWS

- CI/CD

- Application deployment

- AWS CloudFormation

Explore a unique perspective on cloud computing, specifically comparing Azure with AWS. Building on concepts from previous modules, understand infrastructure services on Azure and gain insights into PaaS (Platform as a Service) deployments on Azure.

- Understand basic concepts of Azure Cloud Platform

- Learn Azure Compute Infrastructure

- Learn about Network & Operations

- Understand Azure Serverless Computing

- Introduction to Azure and its Services

- Azure Virtual Machines

- Network Security

- Load Balancing

- Virtual Networking

- Azure Storage

- Azure App Services

- API Management

- Container Apps

- Azure Functions

Dive into Azure's managed services in this module, exploring databases, DevOps, messaging, and more. The key takeaways include insights into Azure Data Implementation, Network & Operations, Azure Cognitive Services, Security & Governance, and Azure DevOps.

- Learn about Azure Data Implementation

- Learn about Network & Operations

- Understand Azure Cognitive Services

- Learn about Azure Security & Governance

- Understand Azure DevOps

- Azure Active Directory

- Azure AD Connect

- Security & Governance

- Azure SQL Database

- Cosmos DB

- Messaging & Event Based Services

- Data Services Intro

- Building ARM Templates

- Azure DevOps

- Cost Management & Monitoring

Enhance your knowledge of cloud computing with self-paced courses covering a wide range of topics, such as big data, microservices, Google Cloud Platform, and many more.

Concepts: Cloud Adoption and Migration, Cloud security on AWS

Concepts: EMR, Hadoop, Spark

Concepts: Basic Constructs, Interservice Communication, Operations, Failure Handling, 12-factor App, Load Balancing, Event Driven Architecture, Reactive Extensions, Logging, and security.

Concepts: Cloud-based Development Environment, Data Streaming and Data Analytics on Cloud, Kinesis Data Stream, Elastic Beanstalk, and Kubernetes.

Concepts: Overview of GCP, Google Compute Engine, Instance Groups, Autoscaling, Load Balancers, Storage, VPC, Google App Engine, Cloud SQL, Cloud Datastore, Spanner, and Google Kubernetes Engine.

Concepts: Azure Active Directory, Key Vault, Managed Identities, and Azure Governance services

You will work on various real-world projects under the guidance of industry experts, in domains such as hospitality, inventory management, and many more.

After successfully completing the course, you can secure a Post Graduate Certificate in Cloud Computing from the University of Texas at Austin. The program's exhaustive curriculum prepares you to become a highly skilled cloud computing professional and pursue your desired career growth at top global companies.

Upon successfully finishing the program, you will receive a Program Completion Certificate jointly issued by Microsoft and Great Learning.

- Configure Azure resources

- Manage Azure tasks

- Manage Azure AD objects

- Manage access control

- Manage Azure subscriptions and governance

- Configure access to storage

- Manage data in Azure storage accounts

- Configure Azure Files and Azure Blob Storage

- Automate deployment of resources by using templates

- Create and configure VMs

- Create and configure containers

- Create and configure an Azure App Service

- Configure virtual networks

- Configure secure access to virtual networks

- Configure load balancing

- Monitor virtual networking

- Monitor resources by using Azure Monitor

- Implement backup and recovery

Languages and Tools covered

Build Job-relevant Skills with Hands-on Projects

Transform theoretical knowledge into tangible skills by working on multiple hands-on exercises under the guidance of industry experts. Below are samples of potential project topics:

1000+ projects completed

22+ domains

Learn from the Best of Academia

Learn from leading academicians in the field of Cloud Computing and several experienced industry practitioners from top organisations.

Dr. Kumar Muthuraman

Faculty Director, Centre for Research and Analytics

Mr. Nirmallya Mukherjee

Former Chief Architect, Dell

Mohit Batra

Founder

Antony John Paul

Platform Engineering Solution Architect, Omnicell

Sachin Trivedi

Enterprise Cloud Architect, AWS

Samuel Baruffi

Senior Solutions Architect, AWS

Sreeharsha Nippani

Senior Manager, Solution Architecture (WWPS / Federal Financials)

Jitendra Mishra

Sr. Cloud Solution Architect (VP)

Mr. Sachin Agrawal

Consultant, Microsoft

Learner Testimonials

"I chose PG Program in Cloud Computing because the curriculum provides a good balance between theory, concepts, practice labs & loads of problem statements to work on. This course also provides a lot of reference material and mock exams to help you prepare for the AWS and Azure certifications."

Divya G Sekhar

Senior Tech Consultant, E&Y (India)

"I got exactly what I was expecting from the program and I will always cherish this experience. And I want to thank Nirmallya for delivering the content in such a wonderful way."

Krishnan L Narayan

Watson IoT Services Leader, IBM (United States)

"I can work independently on projects, anything involving AWS. We started by learning the basics and then moved on to advanced topics. It is an interactive program, and you feel like you are in a classroom."

Nada Khamis

Senior Cloud Engineer, Edgeunity (United States)

"The industry-relevant course added a lot of value. It added to my capabilities and the overall proposition that I can give back to my company. Even my manager acknowledged my skills in this domain."

Sugam Sharma

Telecom Market Lead, Nokia (North America)

"I chose PG Program in Cloud Computing over all the other programs in the market because of its course content. It has a detailed curriculum. After completing the course, I was more confident technically & now I can contribute a lot in delivering solutions to the customers."

Debanjan Dey

Senior Project Manager, Tavant (India)

"This course gave me a great sense of confidence. I chose PG Program in Cloud Computing because of great reviews & feedback I had heard from people. It was a great learning experience for me."

Amrit Raj

Java Developer, IBM (India)

Program Fees

Postgraduate Program in Cloud Computing

- Personalized weekend online mentoring sessions

- 6 months fully online program

- 300+ hours of overall learning

- 15+ real-world, industry-relevant projects

- 90+ services covered in the curriculum

- Live doubt-clearing with expert mentors

- Globally recognized certificate from the University of Texas at Austin

Postgraduate Program in Cloud Computing + Microsoft Azure Administrator Training Program*

- All features in Postgraduate Program in Cloud Computing

- 6 Weeks of Training

- Dedicated Program Manager

- Live Teaching Sessions with Microsoft Certified Trainers

- Up-to-date Question Bank

- 2 Mock Exams

- Access to official Microsoft content

*Great Learning delivers the Microsoft Azure Administrator Training Program in collaboration with Microsoft. The University of Texas at Austin is not involved in the program's design or delivery.

Start learning cloud computing with easy monthly installments and flexible payment tenures to suit your convenience. Reach out to the admissions office at +1 512 645 1416 for more details.

*Subject to partner approval based on regions & eligibility. dLocal for Brazil, Colombia & Mexico learners. Other partners for U.S. learners only.

Application Process

Fill in the application form

Apply by filling a simple online application form.

Interview Process

Go through a screening call with the Admission Director’s office.

Join program

An offer letter will be sent to the selected candidates. Secure your seat by paying the admission fee.

Upcoming Application Deadline

Admissions close once the required number of participants enroll in the upcoming cohort. Apply early to secure your seat.

Deadline: 25th Apr 2024


Reach out to us

We hope you had a good experience with us. If you haven’t received a satisfactory response to your queries or have any other issue to address, please email us at help@mygreatlearning.com.

Batch Start Dates

Online

To be announced

Frequently Asked Questions

At the end of the Cloud Computing program, you will

- Be able to oversee a company's cloud adoption plans, cloud application design, and cloud architecture.

- Be able to design and implement enterprise infrastructure and platforms required for cloud computing.

- Be able to analyze system requirements and ensure that systems will be securely integrated with current applications.

- Develop the ability to architect a cloud environment and make sound component choices.

- Become comfortable working with virtual machines (VM) and the nuances of the most popular tools.

- Build the ability to use NIST Cloud Reference Architectures to solve various problems faced as a cloud professional.

- Understand trade-offs, cost implications and choose the right cloud services for you.

- Understand containers and learn how to work efficiently with Docker

- Use your knowledge of cloud services to suggest and implement cloud-based solutions for your clients and technology teams.

- Build your professional toolkit to become an effective DevOps professional.

UT Austin's PG Program in Cloud Computing goes well beyond preparing you for AWS. From the fundamentals of cloud computing to advanced concepts, the course helps you gain a strong conceptual understanding of the entire cloud ecosystem.

The faculty members teaching in the PG Program in Cloud Computing from UT Austin are industry experts and leaders in their respective areas of expertise and have decades of professional experience and wisdom. Renowned experts from academia also participate.

UT Austin’s Cloud Computing Program includes various real-time projects and a detailed capstone project. You will analyze a real-world problem using a range of tools & techniques that you have learned in live classes. Learners can also participate in a 4-week optional capstone project that tests their knowledge acquired throughout the program.

Virtual labs are real cloud environments which you will be using in this program, not a simulation. For AWS, you will get an AWS lab account with promotional credits. For Azure, you will be guided on how to create a Free tier Azure account.

No. The content is not downloadable. However, we believe that learning should be continuous and hence, all the learning material in terms of lectures and reading content would be available to you on the LMS even after the completion of the course.

UT Austin’s Cloud Computing course is a holistic and rigorous program and follows a continuous evaluation scheme for your hands-on work. While you receive feedback on your lab submissions, your performance in projects will be graded.

You are provided career support through resume workshops and interview preparation sessions for cloud computing job roles. Relevant career opportunities with leading companies are also shared with you.

Live mentored sessions are interactive Q&A sessions held on weekends, where students can clarify their doubts with faculty and connect with other students for knowledge sharing. If you miss a session, you can watch its recording.

All virtual sessions are conducted through Zoom, an online collaboration platform. During mentor sessions, using the chat feature, you can ask questions directly to faculty members, and share messages and useful links with your classmates as well.

All projects are mandatory evaluation components. If you fail to complete a project, you must repeat it.

Candidates should have at least 3 years of experience in an IT or technology role that includes exposure to development, testing/quality assurance, maintenance, database administration, and technology infrastructure management.

You should have an understanding of IT Service Management. It is essential to know the basic concepts of operating systems such as Windows and Linux. Prior exposure to public or private cloud environments, or to infrastructure and network management, is a plus.

No. The PG Program in Cloud Computing by UT Austin trains you for AWS certification, but you will have to obtain the certification separately. You will be trained to pass the AWS Certified Solutions Architect - Associate exam and build a strong foundation for other AWS certifications, such as SysOps and Developer. You will also get additional resources to help you prepare for the AWS and Azure certifications.

The admissions office will help you apply for loans once you receive the offer of admission. Our payment partners include PayPal, Affirm, and Uplift. We ensure money is not a constraint in the path of learning.

Yes. You can pay the course fee in 2-3 easy installments. If you make a one-time full payment you would be entitled to a discount. Please check with the admissions office for more details.

- All interested candidates are required to apply to the Post Graduate Program in Cloud Computing by filling in an online application form.

- Applicants meeting the eligibility criteria receive a screening call.

- The applications are carefully reviewed on the basis of the screening call and the applicant’s profile

- The admission offer letter is released after a successful review of the application.


Still have queries? Contact Us

Please fill in the form and an expert from the admissions office will call you in the next 4 working hours. You can also reach out to us at utcloudcomputing@mygreatlearning.com or +1 512 645 1416
