Introduction to Hadoop 3.0

Learn about the basics of Hadoop with this free Hadoop course. Get introduced to the architecture and components of Hadoop and start your journey in the world of Big Data. Enroll now for free!

What will you learn in Introduction to Hadoop 3.0?

About this Free Certificate Course

In this course on Hadoop, one of the most widely used big data frameworks, you are introduced to Hadoop 3.0, the third major release of Hadoop. You begin by installing Hadoop 3.0. Once you have mastered the installation, you learn what makes Hadoop unique. The course concludes with an introduction to HDFS in Hadoop 3.0, which includes a few changes compared with Hadoop 2.0.

With this course, you get

Free lifetime access

Learn anytime, anywhere

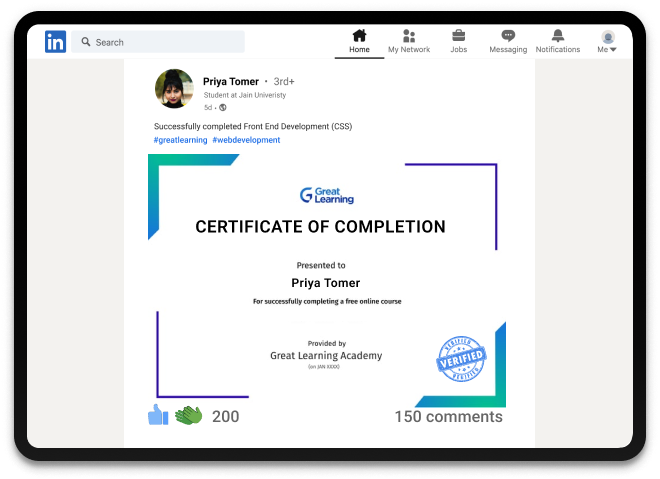

Completion Certificate

Stand out to your professional network

2.5 Hours

of self-paced video lectures

Hadoop is an open-source software framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It is developed and maintained by the Apache Software Foundation and is written in Java.

Hadoop provides a reliable and scalable platform for storing and processing large, complex data sets, making it a popular choice for organizations that deal with big data. Its core components are the Hadoop Distributed File System (HDFS) for storing data, MapReduce for processing data, and YARN (Yet Another Resource Negotiator) for managing cluster resources and scheduling jobs.
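To make the MapReduce model more concrete, here is a minimal, single-process Python sketch of the classic word-count job. It only simulates the map, shuffle, and reduce phases in memory. It does not use Hadoop's actual Java API, and the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) are hypothetical, chosen for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Mapper (hypothetical): emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all intermediate values by key, as the framework would."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reducer (hypothetical): sum the counts collected for one word."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map -> shuffle -> reduce over the input lines in a single process."""
    intermediate = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(intermediate)
    # Sorting keys mimics the sorted order in which reducers receive their keys.
    return dict(reduce_phase(k, v) for k, v in sorted(grouped.items()))


if __name__ == "__main__":
    docs = ["Hadoop stores big data", "Hadoop processes big data"]
    print(word_count(docs))
    # {'big': 2, 'data': 2, 'hadoop': 2, 'processes': 1, 'stores': 1}
```

In a real cluster, Hadoop runs many mapper and reducer tasks in parallel on different nodes, and the shuffle moves intermediate data across the network. The sketch only mirrors the data flow between the three phases.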

Hadoop is used in various industries, such as finance, healthcare, retail, and social media, to gain insights from large and complex data sets. It is also commonly used for machine learning, data mining, and predictive analytics. Because it can handle very large volumes of data, Hadoop has become an important tool for organizations that want to make data-driven decisions and stay ahead of the competition.

Hadoop is used to store and process large and complex data sets that traditional database management systems are not able to handle. It is designed to handle big data in a distributed environment across clusters of computers, providing a reliable, scalable, and cost-effective solution for storing, processing, and analyzing large data sets.
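HDFS spreads data across a cluster by splitting each file into fixed-size blocks and storing several copies of every block on different machines. The sketch below computes how many blocks a file occupies and how much raw disk its replicas consume. It assumes the Hadoop defaults of a 128 MB block size (`dfs.blocksize`) and a replication factor of 3 (`dfs.replication`); the function `plan_storage` and the `StoragePlan` type are hypothetical.

```python
import math
from typing import NamedTuple

# Assumed defaults: dfs.blocksize = 128 MB, dfs.replication = 3.
DEFAULT_BLOCK_SIZE_MB = 128
DEFAULT_REPLICATION = 3


class StoragePlan(NamedTuple):
    blocks: int            # number of HDFS blocks the file is split into
    block_replicas: int    # total block copies stored across DataNodes
    raw_storage_mb: float  # disk space consumed by all replicas


def plan_storage(file_size_mb: float,
                 block_size_mb: int = DEFAULT_BLOCK_SIZE_MB,
                 replication: int = DEFAULT_REPLICATION) -> StoragePlan:
    """Hypothetical helper: estimate block count and raw storage for one file."""
    if file_size_mb < 0 or block_size_mb <= 0 or replication <= 0:
        raise ValueError("file size must be non-negative; block size and replication positive")
    # The last block may be only partially filled; HDFS does not pad it,
    # so raw storage is the file size times the replication factor.
    blocks = math.ceil(file_size_mb / block_size_mb)
    return StoragePlan(
        blocks=blocks,
        block_replicas=blocks * replication,
        raw_storage_mb=file_size_mb * replication,
    )


if __name__ == "__main__":
    # A 1024 MB file -> 8 blocks, 24 block replicas, 3072 MB of raw disk.
    print(plan_storage(1024))
```

Keeping several copies of each block lets HDFS keep serving a file when a machine fails, at the cost of roughly three times the raw storage under the default settings.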

One real-world example of Hadoop being used is in the retail industry. Retail companies generate vast amounts of data from multiple sources such as sales transactions, customer behavior, and supply chain data. Hadoop can be used to store and process this data, and provide valuable insights into customer buying patterns, inventory levels, and market trends. This information can then be used to make informed business decisions, such as adjusting product pricing and optimizing inventory management.

Another example is in the finance industry, where Hadoop can be used to analyze large amounts of financial data, such as stock prices and market trends, to identify investment opportunities and minimize risk. Hadoop can also be used in the healthcare industry to store and process large amounts of patient data, and provide insights into disease diagnosis and treatment options.

These are just a few examples of how Hadoop is being used in various industries to handle big data and provide valuable insights. Its ability to store and process large amounts of data efficiently and effectively makes it a popular choice for organizations looking to stay ahead of the competition.

If you are looking to understand Hadoop and the world of big data, this free Hadoop course is a great place to start. This course provides a comprehensive introduction to Hadoop and its components, such as HDFS, MapReduce, and YARN, and how they work together to store, process, and analyze big data. With the help of interactive examples, hands-on exercises, and real-world scenarios, you can develop a solid understanding of Hadoop and start your journey in the world of big data.